ParaView MPI: Windows

In order to create SSH tunnels to or from your Windows system, you will need additional software.

ParaView MPI: Mac

While Mac and Linux systems typically have an SSH client and server installed by default, Windows systems do not. The commands will also change to include the appropriate cluster name, node number, and your username. The instructions may be different on the CZ and RZ networks. The command must be run on your local desktop in order to work Don't try To create a tunnel, run one of the following ON YOUR DESKTOP machine: You will need an SSH tunnel FROM your desktop TO this cluster.

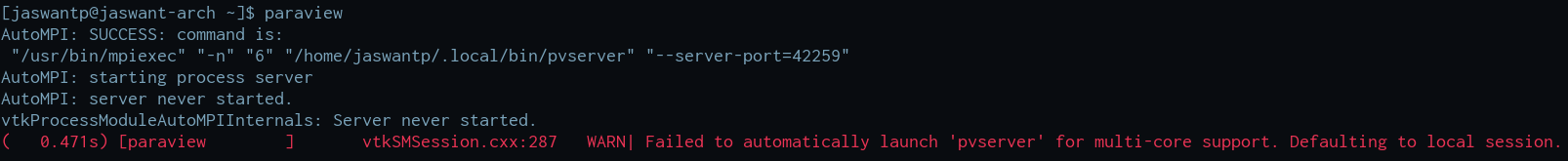

You are on the cluster 'foobar', presumably on the LLNL CZ network. Below is a sample of what those instructions might look like: PLEASE READ THIS PART CAREFULLY! IT MAY CHANGE FOR EACH MACHINE! The pvserver_parallel script will print out instructions for creating the necessary SSH tunnel. (Note that the -force-offscreen-rendering option is included automatically.)
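The exact tunnel command comes from the pvserver_parallel output and differs per machine. Purely as an illustration of its general shape, the sketch below prints an OpenSSH local port forward (ssh -L), which maps a port on your desktop through the cluster's login host to the pvserver port on a batch node. The helper tunnel_cmd, the user name, the node name, and the use of pvserver's default port 11111 are all assumptions for illustration, not LLNL's actual command.

```shell
#!/bin/sh
# tunnel_cmd USER LOGIN_HOST NODE [PORT]  (hypothetical helper)
# Prints an OpenSSH local-forward command: connections to localhost:PORT on
# your desktop are carried through LOGIN_HOST to NODE:PORT on the cluster.
# 11111 is pvserver's default port; use whatever pvserver_parallel reports.
tunnel_cmd() {
    user=$1
    login_host=$2
    node=$3
    port=${4:-11111}
    printf 'ssh -L %s:%s:%s %s@%s\n' "$port" "$node" "$port" "$user" "$login_host"
}

# Hypothetical values: user "jdoe", cluster "foobar", batch node "foobar42".
tunnel_cmd jdoe foobar foobar42
# Run the printed command ON YOUR DESKTOP, then point the ParaView client
# at localhost on the same port.
```

This prints `ssh -L 11111:foobar42:11111 jdoe@foobar`. The helper only builds the string so the pieces (port, node, user, host) are easy to see; always prefer the command that pvserver_parallel prints for your machine.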

On an LC login node, use the appropriate command to request an interactive job allocation (salloc for systems using Slurm, lalloc for LSF). This is how you reserve one or more batch nodes to dedicate to your pvserver task(s). You can (and should) use the command's option flags to specify details about the job, such as the number of nodes, the time limit, the partition of nodes to use, and the account (bank) to charge. For example, you might request 4 nodes for 2 hours 30 minutes, charged to the "foo" account. To see a list of available options, type salloc -h or lalloc -h on the command line. For more in-depth information, type man salloc or view the online Slurm documentation. Please also review the LC documentation regarding banks, allocations, and jobs.

Once the nodes are reserved, you will be logged into an interactive command line on the first node in the batch job. From there, run the LLNL pvserver_parallel script to start the desired pvserver tasks. You must specify the number of pvserver tasks you want to run, and this number is limited by your current job's node allocation. For instance, if you reserved 2 nodes that have 8 cores each, you could run up to 16 pvserver tasks. Be aware that the number of cores per node varies between clusters. You may also pass pvserver_parallel any option that pvserver would accept.
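As a minimal sketch, assuming a Slurm cluster, the 4-node, 2.5-hour request for the "foo" account could use salloc's standard -N (node count), -t (time limit, hours:minutes:seconds) and -A (account) flags. A partition flag (-p) is cluster-specific and left out. The helper max_pvserver_tasks is hypothetical; it only performs the nodes × cores-per-node arithmetic described above.

```shell
#!/bin/sh
# Sketch only: the Slurm request described above, run on an LC login node.
# Add -p <partition> if your cluster requires one.
#   salloc -N 4 -t 2:30:00 -A foo

# max_pvserver_tasks NODES CORES_PER_NODE  (hypothetical helper)
# Prints the upper bound on pvserver tasks: one task per core on every node.
max_pvserver_tasks() {
    nodes=$1
    cores_per_node=$2
    echo $((nodes * cores_per_node))
}

# Example from the text: 2 nodes with 8 cores each.
max_pvserver_tasks 2 8
```

This prints 16. Inside a Slurm allocation the node count is available as $SLURM_JOB_NUM_NODES; check the per-node core count for your specific cluster rather than assuming 8.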

ParaView MPI: Installation

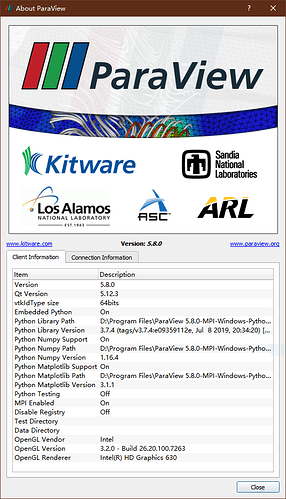

If you aren't sure which version you are using on your desktop, open the Help > About menu item within the ParaView application. On the cluster, run pvserver -V to retrieve the version information. If the desktop and cluster versions do not match, either load a different ParaView module on the cluster (see our main ParaView page) or install a different version of ParaView on your desktop (multiple versions can exist side by side).
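For a quick comparison from a shell, the rough sketch below extracts the first dotted version number from a string and compares two of them. It assumes the version appears as a dotted number somewhere in the output of pvserver -V (the exact wording of that output may differ between releases). The helpers pv_version and versions_match are hypothetical, and the sample strings are made up, not real pvserver output.

```shell
#!/bin/sh
# pv_version TEXT  (hypothetical helper)
# Prints the first dotted version number (e.g. 5.10.1) found in TEXT.
pv_version() {
    printf '%s\n' "$1" | grep -Eo '[0-9]+\.[0-9]+(\.[0-9]+)?' | head -n 1
}

# versions_match DESKTOP CLUSTER  (hypothetical helper)
# Succeeds only when both strings contain the same, non-empty version number.
versions_match() {
    a=$(pv_version "$1")
    b=$(pv_version "$2")
    [ -n "$a" ] && [ "$a" = "$b" ]
}

# On the cluster you might capture:  cluster_out=$(pvserver -V 2>&1)
# The strings below are made-up examples.
if versions_match "5.10.1" "paraview version 5.10.1"; then
    echo "versions match"
else
    echo "versions differ: load another module or install a matching client"
fi
```

The desktop version is typed in by hand here because it comes from the Help > About dialog rather than a command.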

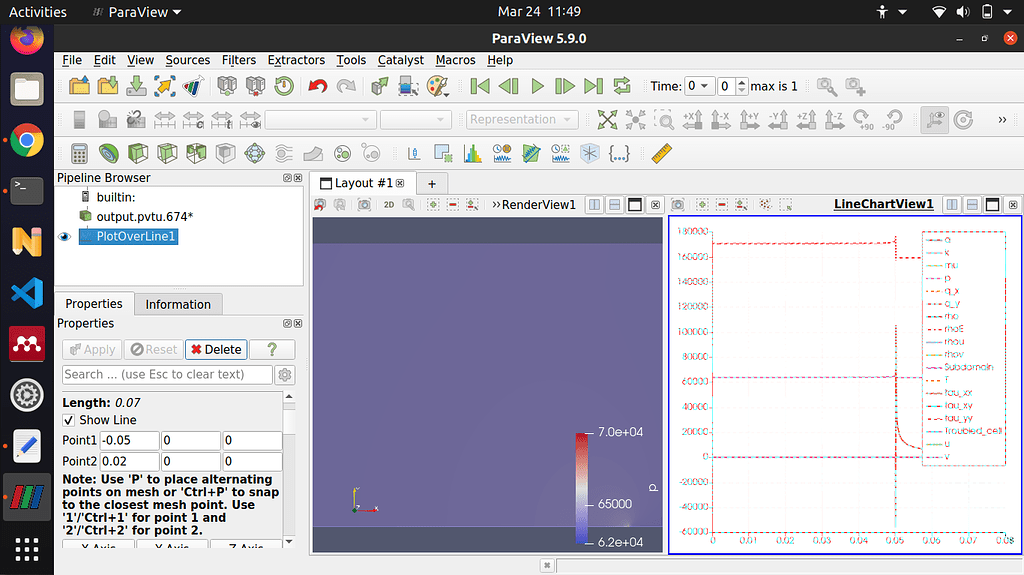

The figure above shows a pictorial representation of ParaView as run in parallel at LLNL. The client runs on your local desktop and uses your graphics card to display images quickly, while the heavy lifting is done on batch nodes of an LC cluster. Because of the firewalls at LLNL, you will have to establish an SSH tunnel to carry the data from the "listening server" on the cluster to the client on your desktop. The instructions on this page walk you through this setup. Your desktop installation of ParaView should match the version you are running on the LC cluster.
